Algorithm development requires high data quality and data scientists who understand the business

Peter Riisberg, our general manager for West Denmark and principal consultant, feels strongly about data quality and business value.

Good data are satisfying to work with, and good data quality can be ensured at the source, which is what Peter constantly recommends to his clients. In this age of data- and algorithm-driven insights, ensuring data quality is more important than ever, not least for the sake of the algorithms.

But to be truly successful, you need to involve the data scientists in the core business to ensure that the algorithms they develop create business value when they are implemented.

How you build an algorithm

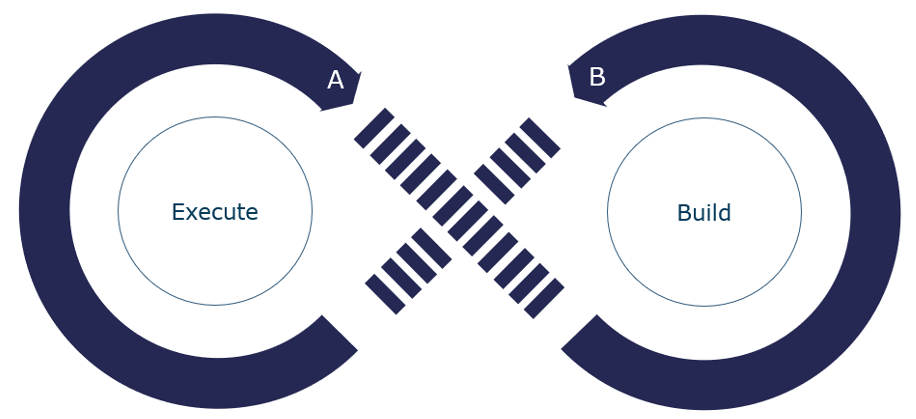

Building an algorithm involves two intertwined processes.

- Your daily business operations create new data all the time (Process A in the illustration). Your data scientists use these data as input to train automation algorithms (Process B in the illustration).

- If this is done correctly, it creates a virtuous, automated circle: new data create new model input, and new models combined with data create more business value at the front end. Everybody is happy!

When it goes wrong

However, according to Peter, the loop can break in the middle.

“Sadly, I have seen data science teams working separately from the core system teams and core technologies. They simply extract data from the core systems, export it to their own data lakes or similar environments, and develop their algorithms in a vacuum. This creates a huge risk that, when they come back to the business, their models cannot be implemented because the data are not available in the core system or, even worse, the models create incorrect results.

“What I also often see is that the data scientists modify the data they extract from the system to fit their modelling needs. This is a natural part of algorithm development, but it is also dangerous if the data are born with poor quality and the data scientists aren’t aware of it.”

Ensure data quality and keep the loop connected

It is critically important that your core system data are born with high quality at the source, both for the quality of the algorithm and for its implementation. If data are born with high quality, the algorithms will have higher explanatory power and will be easier to implement, because the quality of the modelling data will be the same as the quality of the core system data.
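One way to picture "the same quality in modelling as in the core system" is a single set of validation rules that is enforced where the data are born and reused when the training data are assembled. The sketch below is a minimal illustration under stated assumptions: the customer record, its fields, the Danish four-digit postal code rule, and the names `CustomerRecord`, `validate`, `register_record` and `training_rows` are all hypothetical, not taken from any real system.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


# Hypothetical record type; a real core system would have its own schema.
@dataclass
class CustomerRecord:
    customer_id: str
    birth_date: Optional[date]
    postal_code: str


def validate(record: CustomerRecord) -> List[str]:
    """Shared data-quality rules (illustrative only). Returns a list of problems."""
    errors = []
    if not record.customer_id:
        errors.append("customer_id is missing")
    if record.birth_date is None:
        errors.append("birth_date is missing")
    elif record.birth_date > date.today():
        errors.append("birth_date is in the future")
    # Assumption: Danish postal codes, which are four digits.
    if not (len(record.postal_code) == 4 and record.postal_code.isdigit()):
        errors.append("postal_code must be 4 digits")
    return errors


def register_record(record: CustomerRecord, store: List[CustomerRecord]) -> None:
    """Core-system entry point: refuse to store a record that fails the rules."""
    errors = validate(record)
    if errors:
        raise ValueError("; ".join(errors))
    store.append(record)


def training_rows(records: List[CustomerRecord]) -> List[CustomerRecord]:
    """Modelling side: apply the very same rules, so training data match production data."""
    return [r for r in records if not validate(r)]
```

Keeping the rules in one shared function, rather than letting the core system team and the data scientists each write their own cleaning logic, means a record that would be rejected in production can never quietly end up in a training set.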

“Last but not least, it is important that analytics projects are started with deployment in mind. Don’t let the data scientists just run away with the data into their isolated laboratory; make sure they see where their algorithm will be implemented and what its business purpose is. And let them be involved in implementing their algorithm and monitoring it after it moves to production. Then the necessary virtuous loop is created, where the algorithms create true business value,” Peter states.